-

Citrix Cloud’s FREE Two-Factor Authentication that no one talks about!

You’ve probably heard of various 2FA solutions that you deploy for remote access in Citrix, but have you explored what Citrix Cloud itself has to offer? Surprisingly, Citrix Cloud offers a free two-factor authentication solution that often flies under the radar. In this comprehensive guide, we’re going to shed light on this hidden gem, providing…

-

Citrix Virtual Apps and Desktops – Commonly used PowerShell commands

Here’s a cheat sheet with some common commands used in Citrix Virtual Apps and Desktops deployments, along with their explanations. Be careful with the Set, Remove, New, and Add PowerShell commands, as they can make undesirable changes if you don’t know what you are doing. Always start with a Get 🙂 Of course, start…

-

Microsoft Windows Virtual Desktops (WVD) or Citrix – The Big Question answered!

Windows Virtual Desktop (WVD) has been making a lot of waves in the EUC industry ever since Microsoft announced it in September 2018. WVD is a desktop and application virtualization solution that runs on Microsoft Azure. Unlike Microsoft’s previous foray into the application and desktop virtualization markets in the…

-

Citrix Machine Creation Services (MCS) – Primer For On-Prem Vs Azure

Citrix Machine Creation Services (MCS) is a compelling technology these days for provisioning virtual machines quickly and easily in Citrix environments. The whole technology is built around simplicity and requires just a supported hypervisor that utilizes snapshots to create additional VMs on the fly. There isn’t much required from a supporting infrastructure point of view…

-

Configure RDP Proxy in NetScaler

The RDP Proxy functionality is provided as part of Citrix Gateway and is currently available to all NetScaler Enterprise and Platinum customers. The following RDP Proxy features provide access to a remote desktop farm or an RDSH server through Citrix Gateway: Secure RDP traffic through CVPN or ICAProxy mode (without Full Tunnel).…

-

Federating AZURE with VMware Identity Manager and Office 365 as a Service

In this post, we will discuss how to set up federation between Microsoft Azure, Office 365 and VMware Identity Manager. We will be using a Microsoft developer account in this demo configuration, so in the real world you will need to replace the Office account with your customer’s. The blog is split…

-

Storefront Load balancing using NetScaler

It’s been a while since I wrote on my blog so let’s get straight into the post without much mucking around. This time we will discuss how to go about setting up Storefront load balancing using NetScalers. This can be configured on a standalone NetScaler or a NetScaler pair in HA. The recommendation is obviously…

-

Setting Up HDX Flash Redirection – XenApp & XenDesktop

Setting up Flash redirection in Citrix can sometimes be a daunting task. There are a multitude of moving parts to this solution, and a slight error could lead to days of troubleshooting and remediation work. I thought I would document the procedures that I followed to successfully set up Flash redirection to work on …

-

XenApp & XenDesktop 7.x – Citrix Director Load Balancing using NetScaler

Here is a quick and easy way to load balance your Citrix Director instances in a XenApp or XenDesktop environment. Below is my environment: Citrix Director servers (Controller servers in most cases), director-1 and director-2; a NetScaler HA pair (you can do this on a standalone NetScaler as well). Monitors…

-

Tuning HDX policies for optimal end user performance – XenApp/XenDesktop 7.6 FP3

With the release of 7.6 Feature Pack 3, the default graphics delivery behavior has changed and the enhanced Thinwire Compatibility mode is not available via user policies. You will need to consider the different use cases and the importance of policy precedence to ensure that the intended delivery method is used. If Framehawk…

-

XenApp / XenDesktop 7.x – All the PowerShell cmdlets

Here is a dump of everything you can do via the PowerShell cmdlets in a XenApp/XenDesktop 7.x world. Note that the list below was taken from a XenApp 7.5 controller, so there might be SDK updates in the newer releases. I also have this article here discussing how to perform certain tasks in…

-

Running a QFARM command in XenApp 7.x

As most of you are aware by now, the Qfarm command no longer runs in XenApp 7.x because it is part of the old IMA architecture. With FMA, there are several ways to check the server load, the easiest being to use Citrix Studio and check the Load Index. However, there is another method to check the load…

-

Power Management of XenDesktop 7.x Delivery Groups – Prevent workloads from powering off when not in use

There are many times when you will want to prevent XenDesktop/XenApp 7.x power management from powering off virtual machines in the catalog. If you ever wondered how to do it, here are the steps: open PowerShell in administrative mode on the Controller server, run asnp citrix*, and then run Set-BrokerDesktopGroup "Delivery group Name" -AutomaticPowerOnForAssignedDuringPeak $True if the…

-

Citrix License Usage Monitoring using PowerShell

The PowerShell script below can be used to send automated emails on Citrix license usage to Citrix admins. Where do you run this script from? The Citrix License Server. How do you run this? The script can be saved as a file with a .ps1 extension. Also ensure that you have a folder called…

-

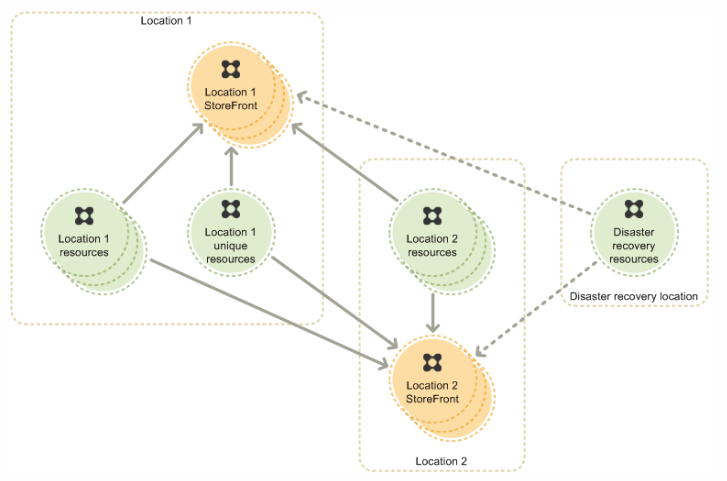

Storefront Multi-Site and High Availability – Guidelines for an Active-Active datacenter design

I am currently working on a XenDesktop 7.6 project that is designed to span 2 datacenters, Auckland and Sydney. One of the critical customer requirements is to direct user connections to their primary site first, regardless of their location, and fail over to the secondary site if the primary site is down. They also have a…

-

Configuring NetScaler for audit logging

In today’s security-conscious environment, maintaining comprehensive audit logs and integrating them with Security Information and Event Management (SIEM) systems is crucial. Citrix NetScaler (formerly known as Citrix ADC) provides robust syslog capabilities that can be leveraged to enhance your organization’s security posture and compliance efforts. Understanding NetScaler’s Audit Logging Capabilities: NetScaler generates various types of…